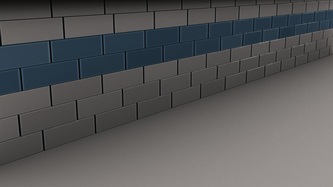

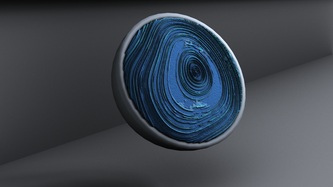

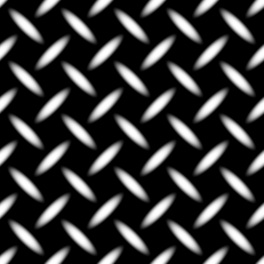

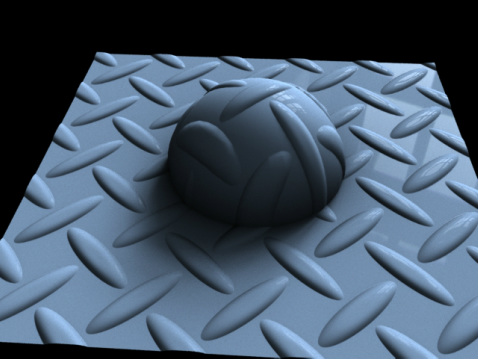

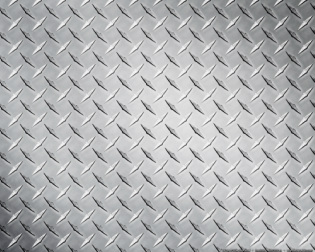

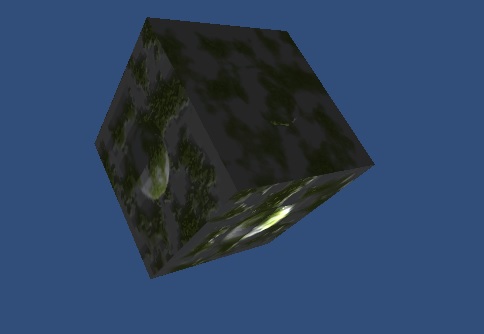

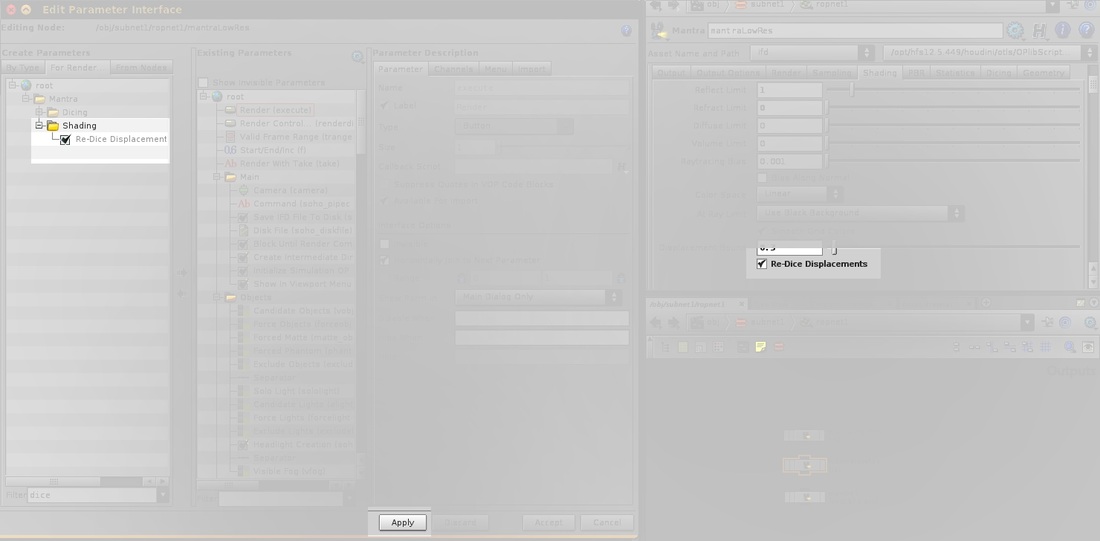

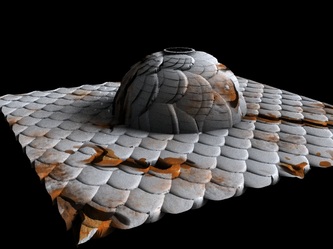

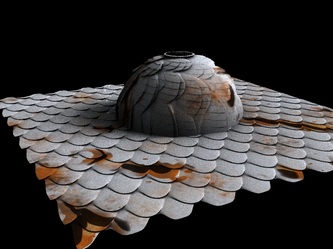

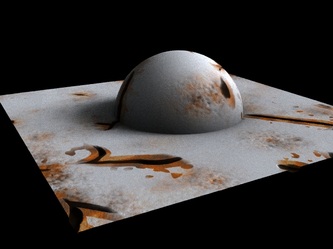

Dabbling in VEX shaders makes it possible to drive textures by face rather than by UVs, but only if the shader has access to the face id.

Voilà! In view, a humble vaudevillian VEX, cast vicariously as both victim and villain by the vicissitudes of fate.

int $fface = getprimid();

Yes, it took longer than I care to admit to find that line of code.
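
For anyone who wants to see the face id do something, here is a minimal sketch of a standalone surface shader that tints each face by its primitive number. It is not the shader from the images above: the shader name `facecolor` and the variables `face` and `tint` are made up for illustration, and the `$` prefix is dropped on the assumption that it is only needed when the line lives inside an Inline Code VOP.

```vex
// Hypothetical surface shader: one flat, repeatable colour per face.
surface facecolor()
{
    // Number of the primitive (face) currently being shaded.
    int face = getprimid();

    // Hash the integer face id into a colour; the same id always
    // produces the same value, so each face keeps its colour.
    vector tint = random(face);

    // Write the result to the surface colour global.
    Cf = tint;
}
```

The same `face` value could just as well index into a per-face texture lookup instead of a random colour; the only part taken from the post is the `getprimid()` call.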