As I understand it, this is going to be a shader that can output diffuse, height, normal, and ambient occlusion passes, and hopefully a rust pass. Noise will be used to simulate dirt and scuff marks. The drips will probably need some sort of fluid sim that changes the point information on the model to output a kind of mask. The shader will be packaged as an otl.

But wait. That would make an extremely heavy otl, wouldn't it? So as I figure out which nodes and settings to use in the shader, I will be writing a Python script that goes with the otl and creates the nodes when the user pushes a button. Do they want a displacement pass? Check a box and the script should create or modify the rop to output a file, and add some sort of displace-along-normals node, a texture map, and a noise for the user to play with.
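Here is a rough sketch of the checkbox idea in plain Python, just to pin down the logic before any real node creation. It only builds a list of what would need to be created. The pass name, the node labels, and the `plan_nodes` helper are all hypothetical placeholders, not actual Houdini node types or API calls.

```python
# Hypothetical mapping from a pass name to the extra nodes it needs.
# These labels are placeholders, not real Houdini node type names.
PASS_NODES = {
    "displacement": ["displace_along_normals", "texture_map", "noise"],
}


def plan_nodes(checked):
    """Return a list of (node_label, pass_name) pairs for every checked pass.

    `checked` maps a pass name to True/False, like a set of checkboxes.
    """
    plan = []
    for pass_name, enabled in checked.items():
        if not enabled:
            continue
        # Every enabled pass needs a rop that writes a file.
        plan.append(("rop_output", pass_name))
        # Then the extra nodes specific to that pass, if any are known.
        for label in PASS_NODES.get(pass_name, []):
            plan.append((label, pass_name))
    return plan


if __name__ == "__main__":
    for label, pass_name in plan_nodes({"displacement": True}):
        print(pass_name, "->", label)
```

Keeping the plan separate from the node creation means the button callback stays small, and the otl itself only has to carry the script rather than every possible node.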

To start off this first week, I will make a simple shader with a color on it and add it to a simple scene. From this scene I will output a color map (just the straight color, meaning the color needs to be emitted) and a height map for displacement. After I figure that out, I will put it into an otl and make sure that transferring the shaders from one scene to another with the otl works. Then I will implement the shaders in Python. Hooray for Python!!!

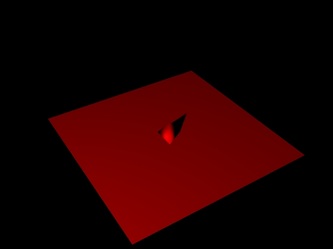

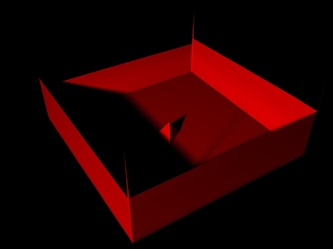

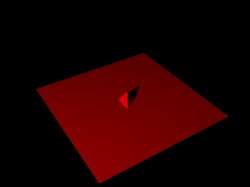

The left image is the original scene; the right is a grid with displacement and color mapped onto it. The displacement map was generated from a depth pass. Once this map was piped in, the ty (translate Y) of the original render cam, which gathers all the information, was subtracted from the image, and then the image was inverted.
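The depth-to-height step can be written out as arithmetic. This is a minimal sketch under two assumptions that are mine: the render cam looks straight down the Y axis, and "inverted" means negating the values (a compositing-style `1 - x` invert would give the same shape, offset by a constant). The function name `depth_to_height` is made up for illustration.

```python
def depth_to_height(depth_rows, cam_ty):
    """Turn a top-down depth image into heights above y = 0.

    depth_rows: list of rows, each a list of depth values from the camera.
    cam_ty: the camera's translate Y.

    Assumes the camera looks straight down, so a surface at depth d
    sits at height cam_ty - d. That is the same as subtracting cam_ty
    from the depth and then negating the result.
    """
    return [[cam_ty - d for d in row] for row in depth_rows]


if __name__ == "__main__":
    # Camera 10 units up: depth 10 is the ground, depth 8 is 2 units tall.
    depth = [[10.0, 8.0], [9.5, 10.0]]
    print(depth_to_height(depth, cam_ty=10.0))
```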

I don't understand where the "wall" is coming from. There are errant pixels somewhere in the render.

I ended up scaling the UVs up by 0.02 and translating them by -0.01 to get rid of those pixels. I don't think this is the ideal way to do it, but it works and the triangle does not move.
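One way to read those numbers, and this is an assumption on my part, is a scale factor of 1.02 about the UV origin followed by an offset of -0.01 on both axes. Under that reading the center of UV space stays put, which would explain why the triangle does not move. The `pad_uv` helper below is hypothetical and only shows the arithmetic.

```python
def pad_uv(u, v, scale=1.02, offset=-0.01):
    """Scale a UV coordinate about the origin, then translate it.

    With scale=1.02 and offset=-0.01, the range [0, 1] maps to
    [-0.01, 1.01] and the center (0.5, 0.5) maps back to itself.
    """
    return (u * scale + offset, v * scale + offset)


if __name__ == "__main__":
    for uv in [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]:
        print(uv, "->", pad_uv(*uv))
```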